The first post in this series made it clear that the major computational and storage costs to implementing the Gauss-Newton algorithm come in computing the matrices and

. By carefully avoiding materialization of the Jacobian matrix and the residual vector, the second post gave us a viable sphere fitting algorithm that can be used to calibrate common MEMS accelerometers (e.g. ADXL335, ADXL345) and magnetometers (e.g. HMC5843). This algorithm still requires multiple passes over the observation data, and that is only possible if all of those observations are stored somewhere; its space complexity is

where

is the number of observations.

In this post we will see how to eliminate that requirement and perform the Gauss-Newton algorithm in a one-pass streaming algorithm with space complexity effectively .

1. The heart of the matter

Notice that we can rewrite the entry of

as follows

So if we know ,

, and

, then we can compute the matrix entry for arbitrary

. We can scan the data once, compute these three numbers and then just use the formula above to compute the matrix entry while iterating through the Gauss-Newton algorithm. Once these numbers are computed, there is never a need to look at the data again.

A similar situation arises for all og the matrix entries. Before proceeding, let’s introduce some new notation that will make the sequel easier to follow, easier to code, and easier to generalize.

2. Notation

To think clearly about the calculations here, we need to stop thinking about 3-vectors and start thinking about -vectors. We will build on the notation introduced in post 1 of this series and define three new

-vectors. For an axis

define

This is the -vector of all observations for that axis. Next define

This is the -vector of the squares of the observations along this axis. Finally, it will be convenient to use a vector of all ones:

For any pair of -vectors

,

, we define the inner product as usual

With this notation, we can rewrite the formula in the last section as

3. Rewriting the matrix entry formulas

We will rewrite each of the formulas derived in post 2 in terms of these -vectors. It is a worthwhile exercise to verify these.

For

for

and for

To we write similar expressions for the entries of , note that

So for

and for

These expressions may look tedious, but they are just straightforward algebra. If we scan the observation data and compute the following statistics for all

we will have all of the information we need to compute our matrix entries:

In fact, the situation is even better. Notice that

So we do not need to store . Also the arrays

and

are symmetric, allowing us to store just 6 of the 9 entries. Altogether, we need to store 24 numbers. Of course we also need to store

, the number of observations, for a total of 25.

4. Putting it into code

Implementing this is straightforward even if it demands a bit of care. To see this implemented, look at the file BestSphereGaussNewtonCalibrator.cpp in muCSense. Here is a quick overview of what to look for.

- The arrays of statistics are private members of the

BestSphereGaussNewtonCalibratorclass and are declared in BestSphereGaussNewtonCalibrator.h

- The statistics are all accumulated in the function in the method

BestSphereGaussNewtonCalibrator::update(BestSphereGaussNewtonCalibrator.cpp). - The calibration matrices are computed in the function in the method

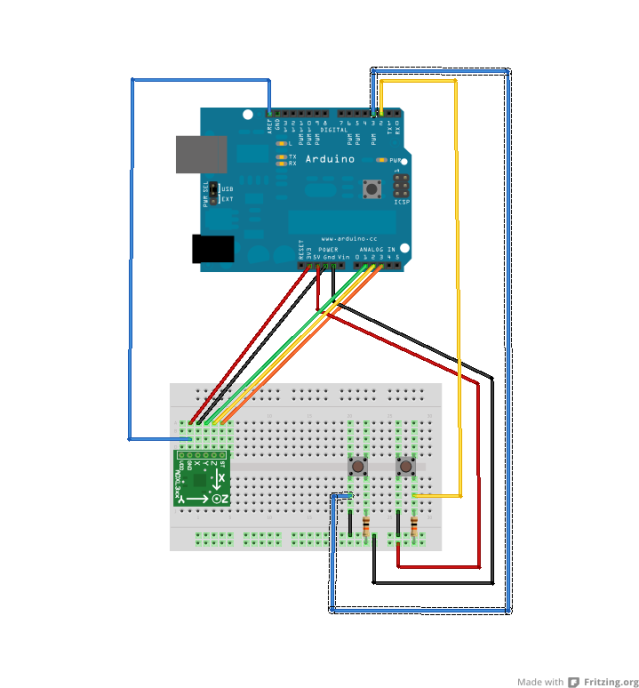

BestSphereGaussNewtonCalibrator::computeGNMatrices(BestSphereGaussNewtonCalibrator.cpp).In muCSense this sphere fitting procedure is used to calibrate “spherical sensors” such as magnetometers and accelerometers. At present, the library specifically supports the inertial sensors on SparkFun’s 9DoF Sensor Stick: the ADXL 345 accelerometer, HMC 5843 magnetometer, and the ITG 3200 gyroscope. To use this code to calibrate a different accelerometer or magnetometer, you just need to define a new subclass of Sensor — see ADXL345.h/ADXL345.cpp for an example — then follow this example sketch.

5. What’s next.

The code in muCSense works, but needs to be optimized. As it is, a simple sketch using this calibration algorithm takes up 20K of the 32K available on an Arduino Uno. The sums and products have a clear structure that I have not attempted to exploit at all. Using this structure should allow a valuable reduction in compiled code size. I will work on this, but would love to hear from anyone else who has some ideas.

It is interesting to note that this algorithm has a clear generalization to any residual function which is a polynomial of the observation vector. It works for higher dimensional observations and for higher degree polynomials. In particular, it can be adapted to handle linear maps with shear, something I plan to implement soon. The process I walked through here should be abstracted to build a system that takes a polynomial residual function as input and determines the statistics and computations needed in the background, optimizing the tedious arithmetic and saving us from writing this sort of code.